During VMware Explore 2022 Barcelona, I received a gift as a vExpert; you can read about it in my previous article. ESXi doesn't support the onboard NICs of the NX6412, so we need a custom ISO that includes the USB Network Native Driver for ESXi. Because of a problem exporting an ISO with the latest PowerCLI 13 release (Nov 25, 2022), I decided to install a custom ESXi 7.0U2e ISO and then upgrade to ESXi 8.0 with a depot zip.

How to prepare a custom ESXi 7U2e ISO image for the NX6412 NUC?

Download these files:

- VMware-ESXi-7.0U2e-19290878-depot.zip
- ESXi702-VMKUSB-NIC-FLING-47140841-component-18150468.zip

Run the following script to prepare the custom ISO image. You can use PowerCLI 12.7 or 13.0, or the create_custom_esxi_iso.ps1 script instead.

Add-EsxSoftwareDepot .\VMware-ESXi-7.0U2e-19290878-depot.zip

Add-EsxSoftwareDepot .\ESXi702-VMKUSB-NIC-FLING-47140841-component-18150468.zip

New-EsxImageProfile -CloneProfile "ESXi-7.0U2e-19290878-standard" -name "ESXi-7.0U2e-19290878-USBNIC" -Vendor "vdan.cz"

Add-EsxSoftwarePackage -ImageProfile "ESXi-7.0U2e-19290878-USBNIC" -SoftwarePackage "vmkusb-nic-fling"

Export-ESXImageProfile -ImageProfile "ESXi-7.0U2e-19290878-USBNIC" -ExportToIso -filepath ESXi-7.0U2e-19290878-USBNIC.iso

Create a bootable ESXi USB flash drive from ESXi-7.0U2e-19290878-USBNIC.iso. More info: How to create a bootable ESXi Installer USB Flash Drive

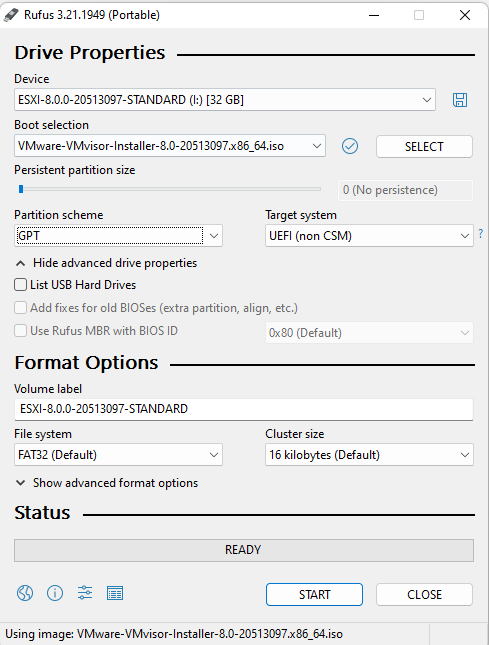

- For the custom ISO image, you must select the Write in ISO -> ESP mode option.
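Both builds in this article (the 7U2e ISO here and the 8.0 depot zip later) run the same five PowerCLI cmdlets; only the input files, the profile names, and the export switch change. Below is a minimal sketch, assuming you have a POSIX shell available, of a hypothetical `gen_powercli` helper that prints those commands so you can save them to a .ps1 file and run it in your PowerCLI session. The function name, its argument order, and the iso/zip mode switch are my own assumptions, not part of PowerCLI.

```shell
#!/bin/sh
# Hypothetical helper: print the PowerCLI commands that clone a stock ESXi
# image profile and add the USB NIC Fling package to it.
# Arguments: base depot zip, Fling component zip, stock profile name,
#            new profile name, vendor, export mode (iso or zip).
gen_powercli() {
    base_depot=$1
    fling_zip=$2
    src_profile=$3
    new_profile=$4
    vendor=$5
    mode=$6

    # Pick the Export-EsxImageProfile switch for the requested output type.
    case "$mode" in
        iso) exp_switch=-ExportToIso ;;
        zip) exp_switch=-ExportToBundle ;;
        *) echo "unknown export mode: $mode" >&2; return 1 ;;
    esac

    # '\\' in a printf format prints a single backslash (Windows path).
    printf 'Add-EsxSoftwareDepot .\\%s\n' "$base_depot"
    printf 'Add-EsxSoftwareDepot .\\%s\n' "$fling_zip"
    printf 'New-EsxImageProfile -CloneProfile "%s" -Name "%s" -Vendor "%s"\n' \
        "$src_profile" "$new_profile" "$vendor"
    printf 'Add-EsxSoftwarePackage -ImageProfile "%s" -SoftwarePackage "vmkusb-nic-fling"\n' \
        "$new_profile"
    printf 'Export-EsxImageProfile -ImageProfile "%s" %s -FilePath %s.%s\n' \
        "$new_profile" "$exp_switch" "$new_profile" "$mode"
}
```

For example, `gen_powercli VMware-ESXi-7.0U2e-19290878-depot.zip ESXi702-VMKUSB-NIC-FLING-47140841-component-18150468.zip ESXi-7.0U2e-19290878-standard ESXi-7.0U2e-19290878-USBNIC vdan.cz iso > build.ps1` should reproduce the script above; passing `zip` instead switches the last command to `-ExportToBundle`. PowerShell parameter names are case-insensitive, so `-Name`/`-FilePath` here are equivalent to `-name`/`-filepath` in the original.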

Install ESXi 7U2e and fix Persisting USB NIC Bindings

Currently, ESXi has a limitation: USB NIC bindings are picked up much later in the boot process. To ensure the settings are preserved across reboots, add the following to /etc/rc.local.d/local.sh (above its final exit 0 line), adjusted to your configuration:
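The snippet below is written for a single adapter named vusb0. If you attach more than one USB NIC, here is a minimal sketch of the same idea that discovers every vusbN interface and waits for each before restoring the vSwitch configuration. It assumes `esxcli network nic list` prints the NIC name in the first column; the function names are hypothetical. Like the original, it checks the link, then sleeps and rechecks up to 20 times, 10 seconds apart.

```shell
#!/bin/sh
# Sketch for hosts with one or more USB NICs (vusb0, vusb1, ...).
# Assumption: `esxcli network nic list` shows the NIC name in column 1.

# Read `esxcli network nic list` output on stdin; print the vusbN names.
list_vusb_nics() {
    awk '$1 ~ /^vusb[0-9]+$/ { print $1 }'
}

# Print the Link Status of one NIC ("Up" or "Down"), as in the original.
nic_link_status() {
    esxcli network nic get -n "$1" | grep 'Link Status' | awk '{print $NF}'
}

# Wait until NIC $1 reports Up, sleeping $3 seconds between checks and
# giving up after $2 sleeps. Returns 0 if the link came up, 1 on timeout.
wait_for_link() {
    nic=$1
    max_tries=${2:-20}
    interval=${3:-10}
    tries=0
    until [ "$(nic_link_status "$nic")" = "Up" ]; do
        tries=$((tries + 1))
        [ "$tries" -gt "$max_tries" ] && return 1
        sleep "$interval"
    done
    return 0
}

# Only act on a real ESXi host.
if command -v esxcli >/dev/null 2>&1; then
    for nic in $(esxcli network nic list | list_vusb_nics); do
        wait_for_link "$nic" 20 10 || echo "$nic link still down" >&2
    done
    # Restore the saved vSwitch configuration, as the original snippet does.
    esxcfg-vswitch -R
fi
```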

vusb0_status=$(esxcli network nic get -n vusb0 | grep 'Link Status' | awk '{print $NF}')

count=0

while [[ $count -lt 20 && "${vusb0_status}" != "Up" ]]

do

sleep 10

count=$(( $count + 1 ))

vusb0_status=$(esxcli network nic get -n vusb0 | grep 'Link Status' | awk '{print $NF}')

done

esxcfg-vswitch -R

Prepare a custom ESXi 8.0 depot zip for the NX6412 NUC

Download these files:

- VMware-ESXi-8.0-20513097-depot.zip
- ESXi800-VMKUSB-NIC-FLING-61054763-component-20826251.zip

Run the following script to prepare the custom depot zip; you can use PowerCLI 13.0. If you run into problems upgrading to PowerCLI 13, see the blog post PowerCLI 13 update and installation hurdles on Windows.

Add-EsxSoftwareDepot .\VMware-ESXi-8.0-20513097-depot.zip

Add-EsxSoftwareDepot .\ESXi800-VMKUSB-NIC-FLING-61054763-component-20826251.zip

New-EsxImageProfile -CloneProfile "ESXi-8.0.0-20513097-standard" -name "ESXi-8.0.0-20513097-USBNIC" -Vendor "vdan.cz"

Add-EsxSoftwarePackage -ImageProfile "ESXi-8.0.0-20513097-USBNIC" -SoftwarePackage "vmkusb-nic-fling"

Export-ESXImageProfile -ImageProfile "ESXi-8.0.0-20513097-USBNIC" -ExportToBundle -filepath ESXi-8.0.0-20513097-USBNIC.zip

Upgrade to ESXi 8.0

Upload ESXi-8.0.0-20513097-USBNIC.zip to a datastore on the host (this example uses /vmfs/volumes/datastore1/_ISO) and run the update from the ESXi shell.
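Before running the update, it can help to confirm the exact profile name inside the uploaded zip, because the value passed to `-p` must match it. `esxcli software sources profile list -d <depot>` lists the image profiles in a depot; the sketch below wraps that check. It assumes the depot path and profile name used in this article and that the profile name is the first column of the listing; `depot_has_profile` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: confirm that an offline depot zip contains the image profile
# you are about to pass to `esxcli software profile update -p`.
# DEPOT and PROFILE are the values used in this article; adjust as needed.
DEPOT=/vmfs/volumes/datastore1/_ISO/ESXi-8.0.0-20513097-USBNIC.zip
PROFILE=ESXi-8.0.0-20513097-USBNIC

# Read `esxcli software sources profile list` output on stdin and return 0
# if any row's first column equals the wanted profile name, 1 otherwise.
depot_has_profile() {
    awk -v want="$1" '$1 == want { found = 1 } END { exit !found }'
}

# Only run on a host that actually has esxcli.
if command -v esxcli >/dev/null 2>&1; then
    if esxcli software sources profile list -d "$DEPOT" | depot_has_profile "$PROFILE"; then
        echo "Profile $PROFILE found in $DEPOT"
    else
        echo "Profile $PROFILE not found in $DEPOT" >&2
    fi
fi
```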

esxcli software profile update -d /vmfs/volumes/datastore1/_ISO/ESXi-8.0.0-20513097-USBNIC.zip -p ESXi-8.0.0-20513097-USBNIC

Hardware precheck of profile ESXi-8.0.0-20513097-USBNIC failed with warnings: <TPM_VERSION WARNING: TPM 1.2 device detected. Support for TPM version 1.2 is discontinued. Installation may proceed, but may cause the system to behave unexpectedly.>

You can get past the TPM_VERSION WARNING (Support for TPM version 1.2 is discontinued) by applying the --no-hardware-warning option, which ignores the warnings and proceeds with the transaction:
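If you prefer not to pass the flag blindly, here is a minimal sketch that first runs the update as-is and retries with --no-hardware-warning only when the output mentions the TPM_VERSION warning, then looks for the `Reboot Required: true` line that the update result prints. The helper names are hypothetical, and the check is a plain text match: the retry still ignores every hardware warning, not just the TPM one, so read the first output before relying on it.

```shell
#!/bin/sh
# Sketch: upgrade with the custom 8.0 depot, adding --no-hardware-warning
# only if the first attempt reports the TPM 1.2 precheck warning.
# DEPOT and PROFILE are the values used in this article; adjust to your host.
DEPOT=/vmfs/volumes/datastore1/_ISO/ESXi-8.0.0-20513097-USBNIC.zip
PROFILE=ESXi-8.0.0-20513097-USBNIC

# Return 0 if the given esxcli output contains the TPM 1.2 precheck warning.
has_tpm12_warning() {
    printf '%s\n' "$1" | grep -q 'TPM_VERSION WARNING'
}

# Return 0 if the given esxcli output says a reboot is needed.
reboot_required() {
    printf '%s\n' "$1" | grep -q 'Reboot Required: true'
}

run_update() {
    out=$(esxcli software profile update -d "$DEPOT" -p "$PROFILE" 2>&1)
    if has_tpm12_warning "$out"; then
        echo "TPM 1.2 warning reported; retrying with --no-hardware-warning"
        # This flag skips ALL hardware precheck warnings, not only the TPM one.
        out=$(esxcli software profile update -d "$DEPOT" -p "$PROFILE" \
            --no-hardware-warning 2>&1)
    fi
    printf '%s\n' "$out"
    if reboot_required "$out"; then
        echo "Update staged; run 'reboot' to finish."
    fi
}

# Only run on a host that actually has esxcli.
if command -v esxcli >/dev/null 2>&1; then
    run_update
fi
```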

esxcli software profile update -d /vmfs/volumes/datastore1/_ISO/ESXi-8.0.0-20513097-USBNIC.zip -p ESXi-8.0.0-20513097-USBNIC --no-hardware-warning

Update Result

Message: The update completed successfully, but the system needs to be rebooted for the changes to be effective.

Reboot Required: true

VIBs Installed: VMW_bootbank_atlantic_1.0.3.0-10vmw.800.1.0.20513097, VMW_bootbank_bcm-mpi3_8.1.1.0.0.0-1vmw.800.1.0.20513097, VMW_bootbank_bfedac-esxio_0.1-1vmw.800.1.0.20513097, VMW_bootbank_bnxtnet_216.0.50.0-66vmw.800.1.0.20513097, VMW_bootbank_bnxtroce_216.0.58.0-27vmw.800.1.0.20513097, VMW_bootbank_brcmfcoe_12.0.1500.3-4vmw.800.1.0.20513097, VMW_bootbank_cndi-igc_1.2.9.0-1vmw.800.1.0.20513097, VMW_bootbank_dwi2c-esxio_0.1-2vmw.800.1.0.20513097, VMW_bootbank_dwi2c_0.1-2vmw.800.1.0.20513097, VMW_bootbank_elxiscsi_12.0.1200.0-10vmw.800.1.0.20513097, VMW_bootbank_elxnet_12.0.1250.0-8vmw.800.1.0.20513097, VMW_bootbank_i40en_1.11.2.5-1vmw.800.1.0.20513097, VMW_bootbank_iavmd_3.0.0.1010-5vmw.800.1.0.20513097, VMW_bootbank_icen_1.5.1.16-1vmw.800.1.0.20513097, VMW_bootbank_igbn_1.4.11.6-1vmw.800.1.0.20513097, VMW_bootbank_ionic-en-esxio_20.0.0-29vmw.800.1.0.20513097, VMW_bootbank_ionic-en_20.0.0-29vmw.800.1.0.20513097, VMW_bootbank_irdman_1.3.1.22-1vmw.800.1.0.20513097, VMW_bootbank_iser_1.1.0.2-1vmw.800.1.0.20513097, VMW_bootbank_ixgben_1.7.1.39-1vmw.800.1.0.20513097, VMW_bootbank_lpfc_14.0.635.3-14vmw.800.1.0.20513097, VMW_bootbank_lpnic_11.4.62.0-1vmw.800.1.0.20513097, VMW_bootbank_lsi-mr3_7.722.02.00-1vmw.800.1.0.20513097, VMW_bootbank_lsi-msgpt2_20.00.06.00-4vmw.800.1.0.20513097, VMW_bootbank_lsi-msgpt35_23.00.00.00-1vmw.800.1.0.20513097, VMW_bootbank_lsi-msgpt3_17.00.13.00-2vmw.800.1.0.20513097, VMW_bootbank_mlnx-bfbootctl-esxio_0.1-1vmw.800.1.0.20513097, VMW_bootbank_mnet-esxio_0.1-1vmw.800.1.0.20513097, VMW_bootbank_mtip32xx-native_3.9.8-1vmw.800.1.0.20513097, VMW_bootbank_ne1000_0.9.0-2vmw.800.1.0.20513097, VMW_bootbank_nenic_1.0.35.0-3vmw.800.1.0.20513097, VMW_bootbank_nfnic_5.0.0.35-3vmw.800.1.0.20513097, VMW_bootbank_nhpsa_70.0051.0.100-4vmw.800.1.0.20513097, VMW_bootbank_nmlx5-core-esxio_4.23.0.36-8vmw.800.1.0.20513097, VMW_bootbank_nmlx5-core_4.23.0.36-8vmw.800.1.0.20513097, VMW_bootbank_nmlx5-rdma-esxio_4.23.0.36-8vmw.800.1.0.20513097, 
VMW_bootbank_nmlx5-rdma_4.23.0.36-8vmw.800.1.0.20513097, VMW_bootbank_nmlxbf-gige-esxio_2.1-1vmw.800.1.0.20513097, VMW_bootbank_ntg3_4.1.8.0-4vmw.800.1.0.20513097, VMW_bootbank_nvme-pcie-esxio_1.2.4.1-1vmw.800.1.0.20513097, VMW_bootbank_nvme-pcie_1.2.4.1-1vmw.800.1.0.20513097, VMW_bootbank_nvmerdma_1.0.3.9-1vmw.800.1.0.20513097, VMW_bootbank_nvmetcp_1.0.1.2-1vmw.800.1.0.20513097, VMW_bootbank_nvmxnet3-ens-esxio_2.0.0.23-1vmw.800.1.0.20513097, VMW_bootbank_nvmxnet3-ens_2.0.0.23-1vmw.800.1.0.20513097, VMW_bootbank_nvmxnet3-esxio_2.0.0.31-1vmw.800.1.0.20513097, VMW_bootbank_nvmxnet3_2.0.0.31-1vmw.800.1.0.20513097, VMW_bootbank_penedac-esxio_0.1-1vmw.800.1.0.20513097, VMW_bootbank_pengpio-esxio_0.1-1vmw.800.1.0.20513097, VMW_bootbank_pensandoatlas_1.46.0.E.24.1.256-2vmw.800.1.0.20293628, VMW_bootbank_penspi-esxio_0.1-1vmw.800.1.0.20513097, VMW_bootbank_pvscsi-esxio_0.1-5vmw.800.1.0.20513097, VMW_bootbank_pvscsi_0.1-5vmw.800.1.0.20513097, VMW_bootbank_qcnic_1.0.15.0-22vmw.800.1.0.20513097, VMW_bootbank_qedentv_3.40.5.70-4vmw.800.1.0.20513097, VMW_bootbank_qedrntv_3.40.5.70-1vmw.800.1.0.20513097, VMW_bootbank_qfle3_1.0.67.0-30vmw.800.1.0.20513097, VMW_bootbank_qfle3f_1.0.51.0-28vmw.800.1.0.20513097, VMW_bootbank_qfle3i_1.0.15.0-20vmw.800.1.0.20513097, VMW_bootbank_qflge_1.1.0.11-1vmw.800.1.0.20513097, VMW_bootbank_rd1173-esxio_0.1-1vmw.800.1.0.20513097, VMW_bootbank_rdmahl_1.0.0-1vmw.800.1.0.20513097, VMW_bootbank_rste_2.0.2.0088-7vmw.800.1.0.20513097, VMW_bootbank_sfvmk_2.4.0.2010-13vmw.800.1.0.20513097, VMW_bootbank_smartpqi_80.4253.0.5000-2vmw.800.1.0.20513097, VMW_bootbank_spidev-esxio_0.1-1vmw.800.1.0.20513097, VMW_bootbank_vmkata_0.1-1vmw.800.1.0.20513097, VMW_bootbank_vmksdhci-esxio_1.0.2-2vmw.800.1.0.20513097, VMW_bootbank_vmksdhci_1.0.2-2vmw.800.1.0.20513097, VMW_bootbank_vmkusb-esxio_0.1-14vmw.800.1.0.20513097, VMW_bootbank_vmkusb-nic-fling_1.11-1vmw.800.1.20.61054763, VMW_bootbank_vmkusb_0.1-14vmw.800.1.0.20513097, 
VMW_bootbank_vmw-ahci_2.0.14-1vmw.800.1.0.20513097, VMware_bootbank_bmcal-esxio_8.0.0-1.0.20513097, VMware_bootbank_bmcal_8.0.0-1.0.20513097, VMware_bootbank_clusterstore_8.0.0-1.0.20513097, VMware_bootbank_cpu-microcode_8.0.0-1.0.20513097, VMware_bootbank_crx_8.0.0-1.0.20513097, VMware_bootbank_drivervm-gpu_8.0.0-1.0.20513097, VMware_bootbank_elx-esx-libelxima.so_12.0.1200.0-6vmw.800.1.0.20513097, VMware_bootbank_esx-base_8.0.0-1.0.20513097, VMware_bootbank_esx-dvfilter-generic-fastpath_8.0.0-1.0.20513097, VMware_bootbank_esx-ui_2.5.1-20374953, VMware_bootbank_esx-update_8.0.0-1.0.20513097, VMware_bootbank_esx-xserver_8.0.0-1.0.20513097, VMware_bootbank_esxio-base_8.0.0-1.0.20513097, VMware_bootbank_esxio-combiner-esxio_8.0.0-1.0.20513097, VMware_bootbank_esxio-combiner_8.0.0-1.0.20513097, VMware_bootbank_esxio-dvfilter-generic-fastpath_8.0.0-1.0.20513097, VMware_bootbank_esxio-update_8.0.0-1.0.20513097, VMware_bootbank_esxio_8.0.0-1.0.20513097, VMware_bootbank_gc-esxio_8.0.0-1.0.20513097, VMware_bootbank_gc_8.0.0-1.0.20513097, VMware_bootbank_loadesx_8.0.0-1.0.20513097, VMware_bootbank_loadesxio_8.0.0-1.0.20513097, VMware_bootbank_lsuv2-hpv2-hpsa-plugin_1.0.0-3vmw.800.1.0.20513097, VMware_bootbank_lsuv2-intelv2-nvme-vmd-plugin_2.7.2173-2vmw.800.1.0.20513097, VMware_bootbank_lsuv2-lsiv2-drivers-plugin_1.0.0-12vmw.800.1.0.20513097, VMware_bootbank_lsuv2-nvme-pcie-plugin_1.0.0-1vmw.800.1.0.20513097, VMware_bootbank_lsuv2-oem-dell-plugin_1.0.0-2vmw.800.1.0.20513097, VMware_bootbank_lsuv2-oem-lenovo-plugin_1.0.0-2vmw.800.1.0.20513097, VMware_bootbank_lsuv2-smartpqiv2-plugin_1.0.0-8vmw.800.1.0.20513097, VMware_bootbank_native-misc-drivers-esxio_8.0.0-1.0.20513097, VMware_bootbank_native-misc-drivers_8.0.0-1.0.20513097, VMware_bootbank_qlnativefc_5.2.46.0-3vmw.800.1.0.20513097, VMware_bootbank_trx_8.0.0-1.0.20513097, VMware_bootbank_vdfs_8.0.0-1.0.20513097, VMware_bootbank_vmware-esx-esxcli-nvme-plugin-esxio_1.2.0.52-1vmw.800.1.0.20513097, 
VMware_bootbank_vmware-esx-esxcli-nvme-plugin_1.2.0.52-1vmw.800.1.0.20513097, VMware_bootbank_vsan_8.0.0-1.0.20513097, VMware_bootbank_vsanhealth_8.0.0-1.0.20513097, VMware_locker_tools-light_12.0.6.20104755-20513097

VIBs Removed: VMW_bootbank_atlantic_1.0.3.0-8vmw.702.0.0.17867351, VMW_bootbank_bnxtnet_216.0.50.0-34vmw.702.0.20.18426014, VMW_bootbank_bnxtroce_216.0.58.0-20vmw.702.0.20.18426014, VMW_bootbank_brcmfcoe_12.0.1500.1-2vmw.702.0.0.17867351, VMW_bootbank_brcmnvmefc_12.8.298.1-1vmw.702.0.0.17867351, VMW_bootbank_elxiscsi_12.0.1200.0-8vmw.702.0.0.17867351, VMW_bootbank_elxnet_12.0.1250.0-5vmw.702.0.0.17867351, VMW_bootbank_i40enu_1.8.1.137-1vmw.702.0.20.18426014, VMW_bootbank_iavmd_2.0.0.1152-1vmw.702.0.0.17867351, VMW_bootbank_icen_1.0.0.10-1vmw.702.0.0.17867351, VMW_bootbank_igbn_1.4.11.2-1vmw.702.0.0.17867351, VMW_bootbank_irdman_1.3.1.19-1vmw.702.0.0.17867351, VMW_bootbank_iser_1.1.0.1-1vmw.702.0.0.17867351, VMW_bootbank_ixgben_1.7.1.35-1vmw.702.0.0.17867351, VMW_bootbank_lpfc_12.8.298.3-2vmw.702.0.20.18426014, VMW_bootbank_lpnic_11.4.62.0-1vmw.702.0.0.17867351, VMW_bootbank_lsi-mr3_7.716.03.00-1vmw.702.0.0.17867351, VMW_bootbank_lsi-msgpt2_20.00.06.00-3vmw.702.0.0.17867351, VMW_bootbank_lsi-msgpt35_17.00.02.00-1vmw.702.0.0.17867351, VMW_bootbank_lsi-msgpt3_17.00.10.00-2vmw.702.0.0.17867351, VMW_bootbank_mtip32xx-native_3.9.8-1vmw.702.0.0.17867351, VMW_bootbank_ne1000_0.8.4-11vmw.702.0.0.17867351, VMW_bootbank_nenic_1.0.33.0-1vmw.702.0.0.17867351, VMW_bootbank_nfnic_4.0.0.63-1vmw.702.0.0.17867351, VMW_bootbank_nhpsa_70.0051.0.100-2vmw.702.0.0.17867351, VMW_bootbank_nmlx4-core_3.19.16.8-2vmw.702.0.0.17867351, VMW_bootbank_nmlx4-en_3.19.16.8-2vmw.702.0.0.17867351, VMW_bootbank_nmlx4-rdma_3.19.16.8-2vmw.702.0.0.17867351, VMW_bootbank_nmlx5-core_4.19.16.10-1vmw.702.0.0.17867351, VMW_bootbank_nmlx5-rdma_4.19.16.10-1vmw.702.0.0.17867351, VMW_bootbank_ntg3_4.1.5.0-0vmw.702.0.0.17867351, VMW_bootbank_nvme-pcie_1.2.3.11-1vmw.702.0.0.17867351, VMW_bootbank_nvmerdma_1.0.2.1-1vmw.702.0.0.17867351, VMW_bootbank_nvmxnet3-ens_2.0.0.22-1vmw.702.0.0.17867351, VMW_bootbank_nvmxnet3_2.0.0.30-1vmw.702.0.0.17867351, VMW_bootbank_pvscsi_0.1-2vmw.702.0.0.17867351, 
VMW_bootbank_qcnic_1.0.15.0-11vmw.702.0.0.17867351, VMW_bootbank_qedentv_3.40.5.53-20vmw.702.0.20.18426014, VMW_bootbank_qedrntv_3.40.5.53-17vmw.702.0.20.18426014, VMW_bootbank_qfle3_1.0.67.0-14vmw.702.0.0.17867351, VMW_bootbank_qfle3f_1.0.51.0-19vmw.702.0.0.17867351, VMW_bootbank_qfle3i_1.0.15.0-12vmw.702.0.0.17867351, VMW_bootbank_qflge_1.1.0.11-1vmw.702.0.0.17867351, VMW_bootbank_rste_2.0.2.0088-7vmw.702.0.0.17867351, VMW_bootbank_sfvmk_2.4.0.2010-4vmw.702.0.0.17867351, VMW_bootbank_smartpqi_70.4000.0.100-6vmw.702.0.0.17867351, VMW_bootbank_vmkata_0.1-1vmw.702.0.0.17867351, VMW_bootbank_vmkfcoe_1.0.0.2-1vmw.702.0.0.17867351, VMW_bootbank_vmkusb-nic-fling_1.8-3vmw.702.0.20.47140841, VMW_bootbank_vmkusb_0.1-4vmw.702.0.20.18426014, VMW_bootbank_vmw-ahci_2.0.9-1vmw.702.0.0.17867351, VMware_bootbank_clusterstore_7.0.2-0.30.19290878, VMware_bootbank_cpu-microcode_7.0.2-0.30.19290878, VMware_bootbank_crx_7.0.2-0.30.19290878, VMware_bootbank_elx-esx-libelxima.so_12.0.1200.0-4vmw.702.0.0.17867351, VMware_bootbank_esx-base_7.0.2-0.30.19290878, VMware_bootbank_esx-dvfilter-generic-fastpath_7.0.2-0.30.19290878, VMware_bootbank_esx-ui_1.34.8-17417756, VMware_bootbank_esx-update_7.0.2-0.30.19290878, VMware_bootbank_esx-xserver_7.0.2-0.30.19290878, VMware_bootbank_gc_7.0.2-0.30.19290878, VMware_bootbank_loadesx_7.0.2-0.30.19290878, VMware_bootbank_lsuv2-hpv2-hpsa-plugin_1.0.0-3vmw.702.0.0.17867351, VMware_bootbank_lsuv2-intelv2-nvme-vmd-plugin_2.0.0-2vmw.702.0.0.17867351, VMware_bootbank_lsuv2-lsiv2-drivers-plugin_1.0.0-5vmw.702.0.0.17867351, VMware_bootbank_lsuv2-nvme-pcie-plugin_1.0.0-1vmw.702.0.0.17867351, VMware_bootbank_lsuv2-oem-dell-plugin_1.0.0-1vmw.702.0.0.17867351, VMware_bootbank_lsuv2-oem-hp-plugin_1.0.0-1vmw.702.0.0.17867351, VMware_bootbank_lsuv2-oem-lenovo-plugin_1.0.0-1vmw.702.0.0.17867351, VMware_bootbank_lsuv2-smartpqiv2-plugin_1.0.0-6vmw.702.0.0.17867351, VMware_bootbank_native-misc-drivers_7.0.2-0.30.19290878, 
VMware_bootbank_qlnativefc_4.1.14.0-5vmw.702.0.0.17867351, VMware_bootbank_vdfs_7.0.2-0.30.19290878, VMware_bootbank_vmware-esx-esxcli-nvme-plugin_1.2.0.42-1vmw.702.0.0.17867351, VMware_bootbank_vsan_7.0.2-0.30.19290878, VMware_bootbank_vsanhealth_7.0.2-0.30.19290878, VMware_locker_tools-light_11.2.6.17901274-18295176

VIBs Skipped:

Finally, reboot ESXi after the upgrade with the reboot command. Good luck!
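Once the host is back up, a quick check confirms it runs build 20513097 and that the vmkusb-nic-fling VIB survived the upgrade. This is a minimal sketch assuming `esxcli system version get` prints a `Build:` line and `esxcli software vib list` shows the VIB name in the first column; `version_build` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: after the reboot, confirm the build number and that the
# USB NIC Fling VIB is still installed.
EXPECTED_BUILD=20513097   # ESXi 8.0 build used in this article

# Read `esxcli system version get` output on stdin; print the Build value.
version_build() {
    awk -F': ' '/^ *Build:/ { print $2 }'
}

# Only run on a host that actually has esxcli.
if command -v esxcli >/dev/null 2>&1; then
    build=$(esxcli system version get | version_build)
    case "$build" in
        *"$EXPECTED_BUILD"*) echo "Running build $build" ;;
        *) echo "Unexpected build: $build" >&2 ;;
    esac
    if esxcli software vib list | grep -q '^vmkusb-nic-fling '; then
        echo "vmkusb-nic-fling is installed"
    else
        echo "vmkusb-nic-fling VIB not found" >&2
    fi
fi
```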