Introduction

In the complex world of virtualization, developers often need to debug guest operating systems and applications. One practical approach is to convert a virtual machine snapshot into a memory dump. This blog post shows how to use the vmss2core tool to transform a VM checkpoint, either a snapshot (.vmsn) or a suspend (.vmss) file, into a core dump that standard debuggers such as WinDbg or the Red Hat crash utility can open.

Step-by-Step Guide


Step 1: Create and Download a Virtual Machine Snapshot (.vmsn and .vmem)

- Select the Problematic Virtual Machine
  - In your VMware environment, identify and select the virtual machine that is experiencing issues.

- Reproduce the Issue
  - Reproduce the problem inside the virtual machine so that the snapshot captures the relevant state.

- Take a Snapshot
  - Right-click the virtual machine.
  - Navigate to Snapshots → Take Snapshot.
  - Enter a name for the snapshot.
  - Ensure “Snapshot the Virtual Machine’s memory” is checked. Without it, the snapshot contains no memory for vmss2core to convert.
  - Click ‘CREATE’ to proceed.

- Access the VM Settings
  - Right-click the virtual machine again.
  - Select ‘Edit Settings’.

- Navigate to the Datastore
  - Select the virtual machine and click ‘Datastores’.
  - Click the datastore name.

- Download the Snapshot Files
  - In the virtual machine’s folder, locate the .vmsn (snapshot state) and .vmem (snapshot memory) files.
  - Select each file, click ‘Download’, and save it locally.

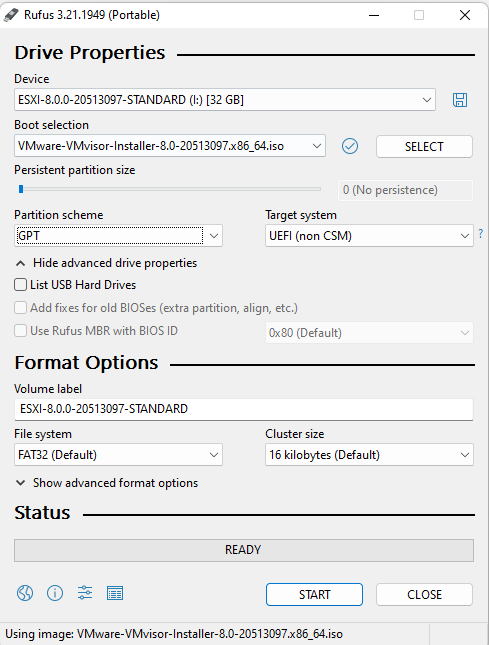
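Before running the conversion, it is worth confirming that each snapshot state file came down together with its memory file. The Python sketch below is a hypothetical helper, not part of vmss2core, that pairs every .vmsn file with a .vmem file of the same base name. It assumes the downloaded files keep their datastore names, such as VM-Snapshot1.vmsn and VM-Snapshot1.vmem. A .vmsn without a partner means the memory was stored inline (a monolithic checkpoint, which vmss2core also accepts), the snapshot was taken without memory, or the .vmem download was missed.

```python
import os
import sys
from pathlib import PurePath


def pair_snapshot_files(file_names):
    """Pair every .vmsn file name with the .vmem file sharing its base name.

    Expects bare file names (as returned by os.listdir). Returns a sorted
    list of (vmsn_name, vmem_name_or_None) tuples.
    """
    available = set(file_names)
    pairs = []
    for name in sorted(available):
        if PurePath(name).suffix != ".vmsn":
            continue
        # VM-Snapshot1.vmsn -> VM-Snapshot1.vmem
        vmem = str(PurePath(name).with_suffix(".vmem"))
        pairs.append((name, vmem if vmem in available else None))
    return pairs


if __name__ == "__main__":
    # Hypothetical usage: pass the download folder as the only argument.
    folder = sys.argv[1] if len(sys.argv) > 1 else "."
    for vmsn, vmem in pair_snapshot_files(os.listdir(folder)):
        print(f"{vmsn} -> {vmem or 'no separate .vmem found'}")
```

Run it with your download folder as the argument; every line should show a .vmsn file followed by its matching .vmem file.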

Step 2: Locate Your vmss2core Installation

- For Windows (32-bit): `C:\Program Files\VMware\VMware Workstation\`

- For Windows (64-bit): `C:\Program Files (x86)\VMware\VMware Workstation\`

- For Linux: `/usr/bin/`

- For Mac OS: `/Library/Application Support/VMware Fusion/`

Note: If vmss2core is not in any of these directories, download it from the VMware Flings site (New Flings Link). Flings are unsupported, so use it at your own risk.
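The lookup in this step can be automated. This Python sketch checks the directories listed above for the current platform and then falls back to the PATH. The executable names (vmss2core.exe on Windows, vmss2core or the Fling's vmss2core-Linux64 on Linux, vmss2core on macOS) and the helper name find_vmss2core are assumptions; adjust them to match your installation.

```python
import ntpath
import os
import platform
import posixpath
import shutil

# Install directories from Step 2, keyed by platform.system() values.
CANDIDATE_DIRS = {
    "Windows": [
        r"C:\Program Files\VMware\VMware Workstation",
        r"C:\Program Files (x86)\VMware\VMware Workstation",
    ],
    "Linux": ["/usr/bin"],
    "Darwin": ["/Library/Application Support/VMware Fusion"],
}

# Assumed executable names; vmss2core-Linux64 is the name used in Step 3.
CANDIDATE_NAMES = {
    "Windows": ["vmss2core.exe"],
    "Linux": ["vmss2core", "vmss2core-Linux64"],
    "Darwin": ["vmss2core"],
}


def find_vmss2core(system=None, exists=os.path.isfile, which=shutil.which):
    """Return the first vmss2core executable found, or None.

    `exists` and `which` are injectable so the search can be tested
    without the tool installed.
    """
    system = system or platform.system()
    names = CANDIDATE_NAMES.get(system, [])
    # Join with the target platform's separator, not the host's.
    join = ntpath.join if system == "Windows" else posixpath.join
    for folder in CANDIDATE_DIRS.get(system, []):
        for name in names:
            candidate = join(folder, name)
            if exists(candidate):
                return candidate
    # Fall back to the PATH (for example, a Fling copy you placed there).
    for name in names:
        found = which(name)
        if found:
            return found
    return None


if __name__ == "__main__":
    print(find_vmss2core() or "vmss2core not found")
```

Checking the fixed directories before the PATH mirrors the order of this step: use the copy bundled with Workstation or Fusion first, and only then a separately downloaded build.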

Step 3: Run the vmss2core Tool

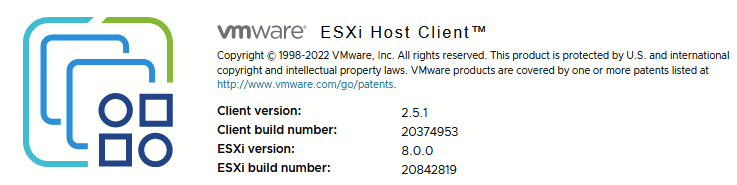

Sample run for a Linux guest snapshot, using the -N option:

`vmss2core.exe -N VM-Snapshot1.vmsn VM-Snapshot1.vmem`

vmss2core version 20800274 Copyright (C) 1998-2022 VMware, Inc. All rights reserved.

Started core writing.

Writing note section header.

Writing 1 memory section headers.

Writing notes.

... 100 MBs written.

... 200 MBs written.

... 300 MBs written.

... 400 MBs written.

... 500 MBs written.

... 600 MBs written.

... 700 MBs written.

... 800 MBs written.

... 900 MBs written.

... 1000 MBs written.

... 1100 MBs written.

... 1200 MBs written.

... 1300 MBs written.

... 1400 MBs written.

... 1500 MBs written.

... 1600 MBs written.

... 1700 MBs written.

... 1800 MBs written.

... 1900 MBs written.

... 2000 MBs written.

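Finished writing core.

The command depends on the guest operating system:

- For Windows guests in general:

  `vmss2core.exe -W [VM_name].vmsn [VM_name].vmem`

- For Windows 8/8.1, Server 2012, 2016, and 2019 guests:

  `vmss2core.exe -W8 [VM_name].vmsn [VM_name].vmem`

- For Linux guests (using the Linux build of the tool):

  `./vmss2core-Linux64 -N [VM_name].vmsn [VM_name].vmem`

Note: Replace [VM_name] with the base name of your snapshot files (for example, VM-Snapshot1). The flag selects the dump format for the guest OS: -W and -W8 produce a WinDbg memory.dmp file, and -N produces a Red Hat crash core file (vmss.core).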
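If you convert snapshots regularly, a small wrapper can pick the flag and follow the progress lines shown in the sample run. The Python sketch below is hypothetical: the guest labels in GUEST_FLAGS and all function names are made up for illustration, the flag mapping comes from the list above, and success is judged only by the "Finished writing core." line from the sample output, not by any documented exit code.

```python
import re
import subprocess

# Hypothetical guest labels mapped to the flags listed above.
GUEST_FLAGS = {
    "windows": "-W",     # Windows guests in general
    "windows8+": "-W8",  # Windows 8/8.1, Server 2012, 2016, 2019
    "linux": "-N",       # Linux guests (Red Hat crash format)
}

# Matches progress lines such as "... 1500 MBs written."
PROGRESS = re.compile(r"\.\.\. (\d+) MBs written\.")


def build_command(tool, guest, base_name):
    """Return the argv list for converting <base_name>.vmsn/.vmem."""
    return [tool, GUEST_FLAGS[guest], f"{base_name}.vmsn", f"{base_name}.vmem"]


def megabytes_written(line):
    """Return N from a '... N MBs written.' line, or None for other lines."""
    match = PROGRESS.search(line)
    return int(match.group(1)) if match else None


def run_vmss2core(tool, guest, base_name):
    """Run the conversion, echo progress, and report whether it finished."""
    finished = False
    with subprocess.Popen(
        build_command(tool, guest, base_name),
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,  # capture messages from either stream
        text=True,
    ) as proc:
        for line in proc.stdout:
            mb = megabytes_written(line)
            if mb is not None:
                print(f"progress: {mb} MB")
            elif "Finished writing core." in line:
                finished = True
    return finished
```

For example, `run_vmss2core("vmss2core.exe", "windows8+", "VM-Snapshot1")`, run from the folder that holds the snapshot files, would issue the same command as the Windows 8 entry above.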

Running vmss2core.exe without arguments prints its full usage text:

`vmss2core.exe`

vmss2core version 20800274 Copyright (C) 1998-2022 VMware, Inc. All rights reserved.

A tool to convert VMware checkpoint state files into formats that third party debugger tools understand. It can handle both suspend (.vmss) and snapshot (.vmsn) checkpoint state files (hereafter referred to as a 'vmss file') as well as both monolithic and non-monolithic (separate .vmem file) encapsulation of checkpoint state data.

Usage (GENERAL): `vmss2core [[options] | [-l linuxoffsets options]] <vmss file> [<vmem file>]`

The "-l" option specifies offsets (a stringset) within the Linux kernel data structures, which is used by -P and -N modes. It is ignored with other modes. Please use "getlinuxoffsets" to automatically generate the correct stringset value for your kernel, see README.txt for additional information.

Without options one `vmss.core<N>` per vCPU with linear view of memory is generated. Other types of core files and output can be produced with these options:

- `-q`: Quiet(er) operation.
- `-M`: Create core file with physical memory view (vmss.core).
- `-l str`: Offset stringset expressed as 0xHEXNUM,0xHEXNUM,... .
- `-N`: Red Hat crash core file for arbitrary Linux version described by the "-l" option (vmss.core).
- `-N4`: Red Hat crash core file for Linux 2.4 (vmss.core).
- `-N6`: Red Hat crash core file for Linux 2.6 (vmss.core).
- `-O <x>`: Use `<x>` as the offset of the entrypoint.
- `-U <i>`: Create linear core file for vCPU `<i>` only.
- `-P`: Print list of processes in Linux VM.
- `-P<pid>`: Create core file for Linux process `<pid>` (`core.<pid>`).
- `-S`: Create core for 64-bit Solaris (vmcore.0, unix.0). Optionally specify the version: -S112 -S64SYM112 for 11.2.
- `-S32`: Create core for 32-bit Solaris (vmcore.0, unix.0).
- `-S64SYM`: Create text symbols for 64-bit Solaris (solaris.text).
- `-S32SYM`: Create text symbols for 32-bit Solaris (solaris.text).
- `-W`: Create WinDbg file (memory.dmp) with commonly used build numbers ("2195" for Win32, "6000" for Win64).
- `-W<num>`: Create WinDbg file (memory.dmp), with `<num>` as the build number (for example: "-W2600").
- `-WK`: Create a Windows kernel memory only dump file (memory.dmp).
- `-WDDB<num>` or `-W8DDB<num>`: Create WinDbg file (memory.dmp), with `<num>` as the debugger data block address in hex (for example: "-W12ac34de").
- `-WSCAN`: Scan all of memory for Windows debugger data blocks (instead of just low 256 MB).
- `-W8`: Generate a memory dump file from a suspended Windows 8 VM.
- `-X32 <mach_kernel>`: Create core for 32-bit Mac OS.
- `-X64 <mach_kernel>`: Create core for 64-bit Mac OS.
- `-F`: Create core for an EFI firmware exception.
- `-F<adr>`: Create core for an EFI firmware exception with system context at the given guest virtual address.
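The output file name depends on the option used, so a script that runs vmss2core also needs to know what to look for afterwards. This Python sketch encodes the file names stated in the usage text above and globs for them in a folder you pass in (typically the directory you ran the tool from, which is an assumption). It returns None where the usage text names no output file (for example -U, -X32/-X64 and -F). Treating -W8 and -WSCAN as producing memory.dmp is also an assumption, and the function names are hypothetical.

```python
import glob
import os
import re


def expected_outputs(flag=None):
    """Return the output file name(s) the usage text gives for `flag`.

    The default mode yields a glob pattern; None means the usage text
    does not name an output file for that option.
    """
    if flag is None:
        # "Without options one vmss.core<N> per vCPU ... is generated."
        return ["vmss.core*"]
    if flag in ("-M", "-N", "-N4", "-N6"):
        return ["vmss.core"]
    if flag in ("-S", "-S112", "-S32"):
        return ["vmcore.0", "unix.0"]
    if flag in ("-S64SYM", "-S64SYM112", "-S32SYM"):
        return ["solaris.text"]
    pid = re.fullmatch(r"-P(\d+)", flag)
    if pid:
        return [f"core.{pid.group(1)}"]
    if flag.startswith("-W"):
        # Stated for -W, -W<num>, -WK, -WDDB<num>, -W8DDB<num>;
        # assumed (not stated) for -W8 and -WSCAN.
        return ["memory.dmp"]
    return None


def find_outputs(folder, flag=None):
    """List the existing output files in `folder` for the given option."""
    found = []
    for pattern in expected_outputs(flag) or []:
        found.extend(sorted(glob.glob(os.path.join(folder, pattern))))
    return found
```

After the sample -N run, for instance, `find_outputs(".", "-N")` would list vmss.core if the tool wrote it to the current directory.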