How to speed up boot time on Cisco UCS M5?

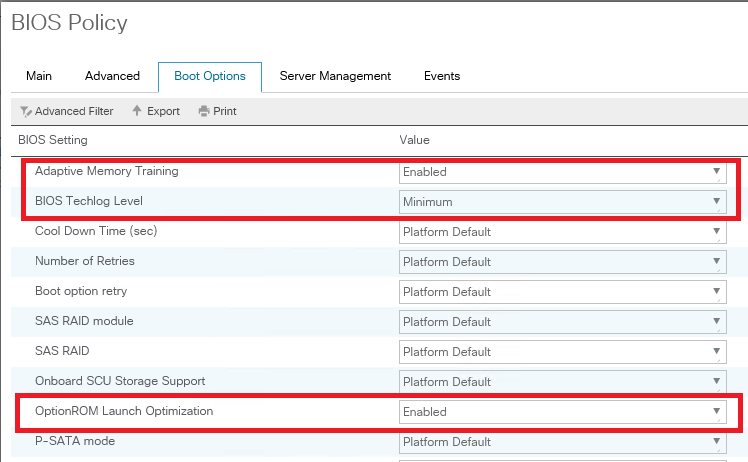

Adaptive Memory Training drop-down list

When this token is enabled, the BIOS saves the memory training results (optimized timing/voltage values) along with CPU/memory configuration information and reuses them on subsequent reboots to save boot time. The saved memory training results are used only if the reboot happens within 24 hours of the last save operation. This can be one of the following:

- Disabled—Adaptive Memory Training is disabled.

- Enabled—Adaptive Memory Training is enabled.

- Platform Default—The BIOS uses the value for this attribute contained in the BIOS defaults for the server type and vendor.
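The reuse rule above can be modeled in a few lines. The following is a minimal sketch of the decision, not Cisco's implementation: `can_reuse_training` and its parameters are hypothetical names. The sketch assumes "Platform Default" has already been resolved to enabled or disabled, since the actual default depends on the server type. It also assumes that a change in the saved CPU/memory configuration invalidates the stored results; the source only says that configuration information is saved alongside the results. Whether a reboot at exactly 24 hours still counts as "within 24 hours" is not specified, so `<=` is a guess.

```python
from datetime import datetime, timedelta
from typing import Optional

# Documented rule: saved results are reused only within 24 hours of the last save.
REUSE_WINDOW = timedelta(hours=24)


def can_reuse_training(
    enabled: bool,
    last_save: Optional[datetime],
    saved_config: str,
    current_config: str,
    now: datetime,
) -> bool:
    """Hypothetical model of whether saved memory training results are reused."""
    if not enabled or last_save is None:
        # Token disabled, or nothing saved yet: full memory training runs.
        return False
    if saved_config != current_config:
        # ASSUMPTION: a CPU/memory configuration change invalidates the saved results.
        return False
    # Reuse only if this reboot falls inside the 24-hour window (boundary is a guess).
    return now - last_save <= REUSE_WINDOW
```

In this model, a server that reboots nightly keeps benefiting, while one that sits idle for two days pays the full training time again on its next boot.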

BIOS Techlog Level

Enabling this token allows the BIOS Tech log output to be controlled at a more granular level, which reduces the number of redundant or low-value BIOS Tech log messages. The option sets the type of messages written to the BIOS Tech log file and can be one of the following:

- Minimum – Critical messages will be displayed in the log file.

- Normal – Warning and loading messages will be displayed in the log file.

- Maximum – Normal-level messages plus informational messages will be displayed in the log file.

Note: This option is mainly for internal debugging purposes.

Note: To disable the Fast Boot option, the end user must set the following tokens as mentioned below:

OptionROM Launch Optimization

The Option ROM launch is controlled at the PCI Slot level, and is enabled by default. In configurations that consist of a large number of network controllers and storage HBAs having Option ROMs, all the Option ROMs may get launched if the PCI Slot Option ROM Control is enabled for all. However, only a subset of controllers may be used in the boot process. When this token is enabled, Option ROMs are launched only for those controllers that are present in boot policy. This can be one of the following:

- Disabled—OptionROM Launch Optimization is disabled.

- Enabled—OptionROM Launch Optimization is enabled.

- Platform Default—The BIOS uses the value for this attribute contained in the BIOS defaults for the server type and vendor.
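To illustrate the effect of this token, the following sketch shows which Option ROMs get launched with and without the optimization. It is a simplified model of the behavior described above, not BIOS code. `Controller`, `roms_to_launch`, and the slot labels are hypothetical. The boot policy is reduced to a set of slot labels, and "Platform Default" is assumed to be resolved to a boolean beforehand.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set


@dataclass(frozen=True)
class Controller:
    slot: str                # hypothetical PCI slot label, e.g. "MLOM" or "1"
    has_option_rom: bool     # controller ships an Option ROM
    slot_orom_enabled: bool  # per-slot PCI Slot Option ROM Control setting


def roms_to_launch(
    controllers: Iterable[Controller],
    boot_policy_slots: Set[str],
    optimization_enabled: bool,
) -> List[str]:
    """Return the slots whose Option ROMs would be launched (simplified model)."""
    launched = []
    for c in controllers:
        # Slot-level control always applies first.
        if not (c.has_option_rom and c.slot_orom_enabled):
            continue
        # With the optimization on, skip controllers absent from the boot policy.
        if optimization_enabled and c.slot not in boot_policy_slots:
            continue
        launched.append(c.slot)
    return launched
```

With three Option ROM-capable controllers and a boot policy that only references `"MLOM"`, the optimization cuts the launched ROMs from three to one. That reduction is where the boot-time saving comes from on servers with many HBAs and NICs.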

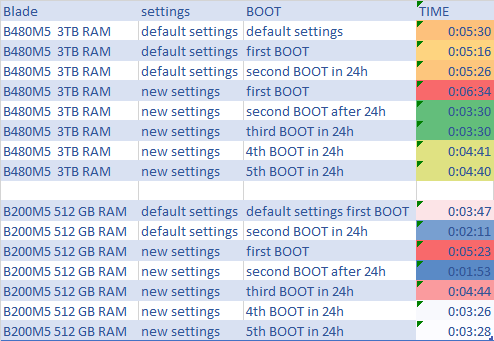

Results

The first boot after applying the new settings takes about 1-2 minutes longer.

From the second boot onward, we save about 2 minutes per boot on a B480 M5 with 3 TB of RAM.
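The figures above imply a quick break-even calculation. The sketch below uses only the numbers reported here: a 1-2 minute penalty on the first boot and about 2 minutes saved per later boot. `net_minutes_saved` is a hypothetical helper. It assumes that every later boot actually gets the full saving, which may not hold if, for example, a reboot falls outside the 24-hour Adaptive Memory Training window.

```python
def net_minutes_saved(boots: int, first_boot_penalty: float, per_boot_saving: float = 2.0) -> float:
    """Net minutes saved over `boots` boots after changing the BIOS settings.

    Boot 1 costs `first_boot_penalty` extra minutes; every later boot is
    assumed to save `per_boot_saving` minutes.
    """
    if boots <= 0:
        return 0.0
    return (boots - 1) * per_boot_saving - first_boot_penalty
```

Even with the worst-case 2-minute penalty, `net_minutes_saved(2, 2.0)` returns `0.0`, so the change pays for itself by the second boot and saves time on every boot after that.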