I upgraded vCenter to version 7 successfully but failed when it came to updating my hosts from 6.7 to 7.

I got a warning that some PCI devices were incompatible but tried anyway. That turned out to fail: my Mellanox ConnectX-2 wasn’t showing up as an available physical NIC.

First, I needed the VID/DID device code for the MT26448 [ConnectX EN 10GigE , PCIe 2.0 5GT/s].

| Partner | Product | Driver | VID | DID |
|---|---|---|---|---|
| Mellanox | MT26448 [ConnectX EN 10GigE , PCIe 2.0 5GT/s] | mlx4_core | 15b3 | 6750 |
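One way to read the VID/DID pair on the host is to filter a PCI listing for the vendor ID. This is a minimal sketch, assuming the listing prints IDs as `vvvv:dddd` (as `lspci -n` is expected to do in the ESXi shell); the helper name `find_vid_did` and the sample line are illustrative and were not captured from the original host.

```shell
#!/bin/sh
# find_vid_did VENDOR: read PCI listing text on stdin and print every
# "vendor:device" ID pair that belongs to the given 4-digit hex vendor ID.
# Hypothetical helper; the input format (vvvv:dddd) is an assumption.
find_vid_did() {
    vendor="$1"
    grep -o -i "${vendor}:[0-9a-f]\{4\}" | tr 'A-F' 'a-f' | sort -u
}

# Sample line in the assumed format (illustrative, not captured output).
sample='0000:05:00.0 Class 0200: 15b3:6750'
printf '%s\n' "$sample" | find_vid_did 15b3
```

On the host itself the same filter would be fed from the real listing, for example `lspci -n | find_vid_did 15b3`.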

Deprecated devices supported by VMKlinux drivers

Devices that were only supported in 6.7 or earlier by a VMKlinux inbox driver. These devices are no longer supported because all support for VMKlinux drivers and their devices has been completely removed in 7.0.

| Partner | Product | Driver | VID |
|---|---|---|---|
| Mellanox | MT26428 [ConnectX VPI - 10GigE / IB QDR, PCIe 2.0 5GT/s] | mlx4_core | 15b3 |
| Mellanox | MT26488 [ConnectX VPI PCIe 2.0 5GT/s - IB DDR / 10GigE Virtualization+] | mlx4_core | 15b3 |
| Mellanox | MT26438 [ConnectX VPI PCIe 2.0 5GT/s - IB QDR / 10GigE Virtualization+] | mlx4_core | 15b3 |
| Mellanox | MT25408 [ConnectX VPI - 10GigE / IB SDR] | mlx4_core | 15b3 |
| Mellanox | MT27560 Family | mlx4_core | 15b3 |
| Mellanox | MT27551 Family | mlx4_core | 15b3 |
| Mellanox | MT27550 Family | mlx4_core | 15b3 |
| Mellanox | MT27541 Family | mlx4_core | 15b3 |
| Mellanox | MT27540 Family | mlx4_core | 15b3 |
| Mellanox | MT27531 Family | mlx4_core | 15b3 |
| Mellanox | MT25448 [ConnectX EN 10GigE, PCIe 2.0 2.5GT/s] | mlx4_core | 15b3 |
| Mellanox | MT25408 [ConnectX EN 10GigE 10GBaseT, PCIe Gen2 5GT/s] | mlx4_core | 15b3 |
| Mellanox | MT27561 Family | mlx4_core | 15b3 |
| Mellanox | MT26468 [ConnectX EN 10GigE, PCIe 2.0 5GT/s Virtualization+] | mlx4_core | 15b3 |
| Mellanox | MT26418 [ConnectX VPI - 10GigE / IB DDR, PCIe 2.0 5GT/s] | mlx4_core | 15b3 |
| Mellanox | MT27510 Family | mlx4_core | 15b3 |
| Mellanox | MT26488 [ConnectX VPI PCIe 2.0 5GT/s - IB DDR / 10GigE Virtualization+] | mlx4_core | 15b3 |
| Mellanox | MT26448 [ConnectX EN 10GigE , PCIe 2.0 5GT/s] | mlx4_core | 15b3 |
| Mellanox | MT25418 [ConnectX VPI - 10GigE / IB DDR, PCIe 2.0 2.5GT/s] | mlx4_core | 15b3 |
| Mellanox | MT27530 Family | mlx4_core | 15b3 |
| Mellanox | MT27521 Family | mlx4_core | 15b3 |
| Mellanox | MT27511 Family | mlx4_core | 15b3 |
| Mellanox | MT25408 [ConnectX EN 10GigE 10BASE-T, PCIe 2.0 2.5GT/s] | mlx4_core | 15b3 |
| Mellanox | MT25408 [ConnectX IB SDR Flash Recovery] | mlx4_core | 15b3 |
| Mellanox | MT25400 Family [ConnectX-2 Virtual Function] | mlx4_core | 15b3 |

Deprecated devices supported by VMKlinux drivers – full table list

How to fix it? I tuned a small script, ESXi7-enable-nmlx4_co.v00.sh, to do it. Notes:

- edit the path to your datastore; the example uses /vmfs/volumes/ISO

- nmlx4_co.v00.orig is a backup of the original nmlx4_co.v00

- the new VIB has no signatures, so an ALERT message will appear in the log during reboot:

  - ALERT: Failed to verify signatures of the following vib

- an ESXi reboot is needed to load the new driver

cp /bootbank/nmlx4_co.v00 /vmfs/volumes/ISO/nmlx4_co.v00.orig

cp /bootbank/nmlx4_co.v00 /vmfs/volumes/ISO/n.tar

cd /vmfs/volumes/ISO/

vmtar -x n.tar -o output.tar

rm -f n.tar

mkdir tmp-network

mv output.tar tmp-network/output.tar

cd tmp-network

tar xf output.tar

rm output.tar

echo '' >> /vmfs/volumes/ISO/tmp-network/etc/vmware/default.map.d/nmlx4_core.map

echo 'regtype=native,bus=pci,id=15b36750..............,driver=nmlx4_core' >> /vmfs/volumes/ISO/tmp-network/etc/vmware/default.map.d/nmlx4_core.map

cat /vmfs/volumes/ISO/tmp-network/etc/vmware/default.map.d/nmlx4_core.map

echo ' 6750 Mellanox ConnectX-2 Dual Port 10GbE ' >> /vmfs/volumes/ISO/tmp-network/usr/share/hwdata/default.pciids.d/nmlx4_core.ids

cat /vmfs/volumes/ISO/tmp-network/usr/share/hwdata/default.pciids.d/nmlx4_core.ids

tar -cf /vmfs/volumes/ISO/FILE.tar *

cd /vmfs/volumes/ISO/

vmtar -c FILE.tar -o output.vtar

gzip output.vtar

mv output.vtar.gz nmlx4_co.v00

rm FILE.tar

cp /vmfs/volumes/ISO/nmlx4_co.v00 /bootbank/nmlx4_co.v00
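The two `echo ... >>` steps in the script append unconditionally, so a second run would add the same entries again. Below is a minimal sketch of building the map entry from the VID/DID and appending it only once. The helper names `map_line` and `append_once` and the scratch file are hypothetical, and the 22-character width of the `id` field is an assumption inferred from the existing entries in nmlx4_core.map.

```shell
#!/bin/sh
# map_line VID DID DRIVER: print a device-map entry in the format used by
# nmlx4_core.map. The id field is padded with '.' to 22 characters
# (assumed width, inferred from the existing entries).
map_line() {
    id="$1$2"
    while [ "${#id}" -lt 22 ]; do
        id="${id}."
    done
    printf 'regtype=native,bus=pci,id=%s,driver=%s\n' "$id" "$3"
}

# append_once FILE LINE: append LINE only if FILE does not already contain
# exactly that line, so a repeated run does not create duplicates.
append_once() {
    grep -q -x -F -e "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Demonstration on a scratch file (hypothetical path, not the real map file).
demo="${TMPDIR:-/tmp}/nmlx4_core.map.demo.$$"
: > "$demo"
append_once "$demo" "$(map_line 15b3 6750 nmlx4_core)"
append_once "$demo" "$(map_line 15b3 6750 nmlx4_core)"   # no-op the second time
cat "$demo"
rm -f "$demo"
```

In the script, the target of `append_once` would be the extracted etc/vmware/default.map.d/nmlx4_core.map under tmp-network instead of the scratch file.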

The script adds HW ID support to the file nmlx4_core.map:

*********************************************************************

/vmfs/volumes/ISO/tmp-network/etc/vmware/default.map.d/nmlx4_core.map

*********************************************************************

regtype=native,bus=pci,id=15b301f6..............,driver=nmlx4_core

regtype=native,bus=pci,id=15b301f8..............,driver=nmlx4_core

regtype=native,bus=pci,id=15b31003..............,driver=nmlx4_core

regtype=native,bus=pci,id=15b31004..............,driver=nmlx4_core

regtype=native,bus=pci,id=15b31007..............,driver=nmlx4_core

regtype=native,bus=pci,id=15b3100715b30003......,driver=nmlx4_core

regtype=native,bus=pci,id=15b3100715b30006......,driver=nmlx4_core

regtype=native,bus=pci,id=15b3100715b30007......,driver=nmlx4_core

regtype=native,bus=pci,id=15b3100715b30008......,driver=nmlx4_core

regtype=native,bus=pci,id=15b3100715b3000c......,driver=nmlx4_core

regtype=native,bus=pci,id=15b3100715b3000d......,driver=nmlx4_core

regtype=native,bus=pci,id=15b36750..............,driver=nmlx4_core

------------------------->Last line is the FIX

It also adds HW ID support to the file nmlx4_core.ids:

**************************************************************************************

/vmfs/volumes/FreeNAS/ISO/tmp-network/usr/share/hwdata/default.pciids.d/nmlx4_core.ids

**************************************************************************************

#

# This file is mechanically generated. Any changes you make

# manually will be lost at the next build.

#

# Please edit <driver>_devices.py file for permanent changes.

#

# Vendors, devices and subsystems.

#

# Syntax (initial indentation must be done with TAB characters):

#

# vendor vendor_name

# device device_name <-- single TAB

# subvendor subdevice subsystem_name <-- two TABs

15b3 Mellanox Technologies

01f6 MT27500 [ConnectX-3 Flash Recovery]

01f8 MT27520 [ConnectX-3 Pro Flash Recovery]

1003 MT27500 Family [ConnectX-3]

1004 MT27500/MT27520 Family [ConnectX-3/ConnectX-3 Pro Virtual Function]

1007 MT27520 Family [ConnectX-3 Pro]

15b3 0003 ConnectX-3 Pro VPI adapter card; dual-port QSFP; FDR IB (56Gb/s) and 40GigE (MCX354A-FCC)

15b3 0006 ConnectX-3 Pro EN network interface card 40/56GbE dual-port QSFP(MCX314A-BCCT )

15b3 0007 ConnectX-3 Pro EN NIC; 40GigE; dual-port QSFP (MCX314A-BCC)

15b3 0008 ConnectX-3 Pro VPI adapter card; single-port QSFP; FDR IB (56Gb/s) and 40GigE (MCX353A-FCC)

15b3 000c ConnectX-3 Pro EN NIC; 10GigE; dual-port SFP+ (MCX312B-XCC)

15b3 000d ConnectX-3 Pro EN network interface card; 10GigE; single-port SFP+ (MCX311A-XCC)

6750 Mellanox ConnectX-2 Dual Port 10GbE

-------->Last line is the FIX

After the reboot I could see support for the MT26448 [ConnectX EN 10GigE , PCIe 2.0 5GT/s].

The only alert in the log is: Failed to verify signatures of the following vib(s): [nmlx4-core].

2020-XX-XXTXX:XX:44.473Z cpu0:2097509)ALERT: Failed to verify signatures of the following vib(s): [nmlx4-core]. All tardisks validated

2020-XX-XXTXX:XX:47.909Z cpu1:2097754)Loading module nmlx4_core ...

2020-XX-XXTXX:XX:47.912Z cpu1:2097754)Elf: 2052: module nmlx4_core has license BSD

2020-XX-XXTXX:XX:47.921Z cpu1:2097754)<NMLX_INF> nmlx4_core: init_module called

2020-XX-XXTXX:XX:47.921Z cpu1:2097754)Device: 194: Registered driver 'nmlx4_core' from 42

2020-XX-XXTXX:XX:47.921Z cpu1:2097754)Mod: 4845: Initialization of nmlx4_core succeeded with module ID 42.

2020-XX-XXTXX:XX:47.921Z cpu1:2097754)nmlx4_core loaded successfully.

2020-XX-XXTXX:XX:47.951Z cpu1:2097754)<NMLX_INF> nmlx4_core: 0000:05:00.0: nmlx4_core_Attach - (nmlx4_core_main.c:2476) running

2020-XX-XXTXX:XX:47.951Z cpu1:2097754)DMA: 688: DMA Engine 'nmlx4_core' created using mapper 'DMANull'.

2020-XX-XXTXX:XX:47.951Z cpu1:2097754)DMA: 688: DMA Engine 'nmlx4_core' created using mapper 'DMANull'.

2020-XX-XXTXX:XX:47.951Z cpu1:2097754)DMA: 688: DMA Engine 'nmlx4_core' created using mapper 'DMANull'.

2020-XX-XXTXX:XX:49.724Z cpu1:2097754)<NMLX_INF> nmlx4_core: 0000:05:00.0: nmlx4_ChooseRoceMode - (nmlx4_core_main.c:382) Requested RoCE mode RoCEv1

2020-XX-XXTXX:XX:49.724Z cpu1:2097754)<NMLX_INF> nmlx4_core: 0000:05:00.0: nmlx4_ChooseRoceMode - (nmlx4_core_main.c:422) Requested RoCE mode is supported - choosing RoCEv1

2020-XX-XXTXX:XX:49.934Z cpu1:2097754)<NMLX_INF> nmlx4_core: 0000:05:00.0: nmlx4_CmdInitHca - (nmlx4_core_fw.c:1408) Initializing device with B0 steering support

2020-XX-XXTXX:XX:50.561Z cpu1:2097754)<NMLX_INF> nmlx4_core: 0000:05:00.0: nmlx4_InterruptsAlloc - (nmlx4_core_main.c:1744) Granted 38 MSIX vectors

2020-XX-XXTXX:XX:50.561Z cpu1:2097754)<NMLX_INF> nmlx4_core: 0000:05:00.0: nmlx4_InterruptsAlloc - (nmlx4_core_main.c:1766) Using MSIX

2020-XX-XXTXX:XX:50.781Z cpu1:2097754)Device: 330: Found driver nmlx4_core for device 0xxxxxxxxxxxxxxxxxxxxxxx

Some 10 Gbps testing after tuning looks great between two ESXi 7.0 hosts with 2x MT2644:

[ ID] Interval Transfer Bandwidth Retr

[ 4] 0.00-120.00 sec 131 GBytes 9380 Mbits/sec 0 sender

[ 4] 0.00-120.00 sec 131 GBytes 9380 Mbits/sec receiver

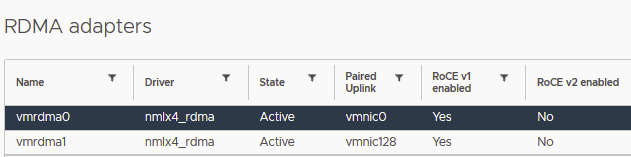
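As a rough cross-check of the transfer figures above, the bandwidth can be recomputed from the size and the duration. This is a sketch under the assumption that the tool reports binary GBytes (1024^3 bytes) and decimal Mbits (10^6 bits), as iperf3 does; the function name `mbits_per_sec` is made up for illustration.

```shell
#!/bin/sh
# mbits_per_sec GBYTES SECONDS: convert a transfer size and a duration into
# Mbit/s. Assumes binary gigabytes (1024^3 bytes) for the size and decimal
# megabits (10^6 bits) for the rate.
mbits_per_sec() {
    awk -v gb="$1" -v s="$2" \
        'BEGIN { printf "%.0f\n", gb * 1024 * 1024 * 1024 * 8 / s / 1000000 }'
}

# 131 GBytes in 120 seconds, as in the result above.
mbits_per_sec 131 120
```

This prints 9377, which matches the reported 9380 Mbits/sec to within the rounding of the 131 GBytes figure.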

Only RoCEv1 is supported, because:

- RoCEv2 support starts with a higher card, the Mellanox ConnectX-3 Pro

- We can see RoCEv2 options in the nmlx4_core driver, but when I enabled enable_rocev2 it did NOT work

[root@esxi~] esxcli system module parameters list -m nmlx4_core

Name Type Value Description

---------------------- ---- ----- -----------

enable_64b_cqe_eqe int Enable 64 byte CQEs/EQEs when the the FW supports this

enable_dmfs int Enable Device Managed Flow Steering

enable_qos int Enable Quality of Service support in the HCA

enable_rocev2 int Enable RoCEv2 mode for all devices

enable_vxlan_offloads int Enable VXLAN offloads when supported by NIC

log_mtts_per_seg int Log2 number of MTT entries per segment

log_num_mgm_entry_size int Log2 MGM entry size, that defines the number of QPs per MCG, for example: value 10 results in 248 QP per MGM entry

msi_x int Enable MSI-X

mst_recovery int Enable recovery mode(only NMST module is loaded)

rocev2_udp_port int Destination port for RoCEv2

This is officially NOT supported. Use it only in your home lab. But it can save some money on new 10 Gbps network cards.